One-node vSAN cluster:

Have you tried building a one-node vSAN cluster, or converting an existing multi-node cluster to one node? Though it's not recommended by VMware, a one-node vSAN cluster is possible, but with limitations. The main one is that there is no data protection: a single-node cluster works only with an FTT 0 (failures to tolerate = 0) policy, which cannot survive any failure, whether of a hard drive or of the whole server. To put it simply, data will be lost in case of a drive or host failure. To give an overview of the number of components created with a specific policy, here is a summary; I am going to write a separate post covering vSAN policies in detail.

- FTT 0 = Number of components built per object is 1, which is why this policy provides no data protection if a drive or server fails.

- FTT 1 = Number of components per object is 3, i.e., 2 replicas + 1 witness, and this policy tolerates 1 node failure. Why and how? The 3 components are placed on 3 different hosts, and an object stays accessible only while more than 50% of its votes are available. Therefore, 1 node failure in the cluster reduces the available votes by 33%, and the other two components keep the object running with 67% of the votes.

- FTT 2 = Number of components per object is typically 5, i.e., 3 replicas + 2 witnesses, and this policy tolerates 2 node failures. Why and how? The 5 components are placed on 5 different hosts, and for any object to run, more than 50% of votes are required. Therefore, 2 node failures reduce the available votes by 40%, and the remaining three components keep the object running with 60% of the votes.
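To make the vote arithmetic concrete, here is a minimal Python sketch of the majority rule under a simplified RAID-1 (mirroring) model: an FTT n object has n + 1 replicas and, by assumption, n witnesses (2n + 1 components, one per host), each component carries one vote, and the object stays accessible only while strictly more than 50% of its votes survive. The function names are hypothetical, and real vSAN can give a component more than one vote and adds components for stripe width or objects larger than 255 GB, so treat this as an illustration rather than vSAN's actual placement logic.

```python
def component_count(ftt: int) -> tuple[int, int]:
    """Return (replicas, witnesses) for a RAID-1 mirrored object in this simplified model."""
    replicas = ftt + 1   # the data is kept in FTT + 1 full copies
    witnesses = ftt      # assumption: n witnesses, giving 2n + 1 components in total
    return replicas, witnesses


def is_accessible(ftt: int, failed_hosts: int) -> bool:
    """True if strictly more than 50% of the object's votes survive the host failures."""
    replicas, witnesses = component_count(ftt)
    total_votes = replicas + witnesses                 # assumption: one vote per component
    surviving = max(0, total_votes - failed_hosts)     # each failed host holds one component
    return surviving * 2 > total_votes


for ftt in (0, 1, 2):
    replicas, witnesses = component_count(ftt)
    print(
        f"FTT {ftt}: {replicas + witnesses} components "
        f"({replicas} replicas + {witnesses} witnesses), "
        f"survives {ftt} host failure(s): {is_accessible(ftt, ftt)}, "
        f"survives {ftt + 1}: {is_accessible(ftt, ftt + 1)}"
    )
```

For FTT 1 this reproduces the 67% case (2 of 3 votes), and for FTT 2 losing two hosts leaves 3 of 5 votes, which is still a majority; in every case one more failure than the policy tolerates drops the object to 50% or less of its votes, and for FTT 0 a single failure is enough.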

A few more policy settings also change the component count, such as RAID-5/6 (erasure coding), objects larger than 255 GB, and different stripe width values; these will be discussed in the separate post mentioned earlier.

Let me quickly mention my lab environment details:

- Number of hosts in the vSAN cluster: 3, with HA and DRS enabled. The number of hosts in the vCenter cluster should match the Sub-Cluster Member Count reported by `esxcli vsan cluster get`.

- Number of VMs: 6, each with a 60 GB thin-provisioned disk

- Cluster health: Healthy, with no resync, no inaccessible objects, and no other errors in the cluster.

- Storage policies in the vSAN cluster: Virtual SAN Default Storage Policy (FTT 1)
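The lab notes say the host count in the vCenter cluster should match the Sub-Cluster Member Count from `esxcli vsan cluster get`. A minimal Python sketch of that comparison, assuming you have copied the command's text output into a string; the sample below is a trimmed, illustrative excerpt, not output captured from this lab, and `sub_cluster_member_count` and `members_match` are hypothetical helper names. The same check applies at the end of the procedure, where the expected count is 1.

```python
import re

# Illustrative, trimmed excerpt of `esxcli vsan cluster get` output (not captured from this lab).
SAMPLE_OUTPUT = """\
Cluster Information
   Enabled: true
   Sub-Cluster Member Count: 3
"""


def sub_cluster_member_count(esxcli_output: str) -> int:
    """Extract the Sub-Cluster Member Count value from the esxcli text output."""
    match = re.search(r"Sub-Cluster Member Count:\s*(\d+)", esxcli_output)
    if match is None:
        raise ValueError("Sub-Cluster Member Count not found in output")
    return int(match.group(1))


def members_match(esxcli_output: str, vcenter_host_count: int) -> bool:
    """True if vSAN membership agrees with the number of hosts in the vCenter cluster."""
    return sub_cluster_member_count(esxcli_output) == vcenter_host_count


print(members_match(SAMPLE_OUTPUT, 3))
```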

Info to be collected:

1. To start with, check the current VM storage policies. This can be done in several ways; I use PowerCLI, and yes, you can check the same in the vCenter GUI, but trust me, that is time consuming. The command to check the storage policies of the VMs is:

```powershell
get-cluster lab-cluster-1 | get-vm | get-spbmentityconfiguration | select-object Entity,Storagepolicy
```

2. From the above output, it's clear that all the VMs are mapped to the Virtual SAN Default Storage Policy. Since the cluster has only 3 hosts, a host cannot enter maintenance mode with full data migration under this policy (its FTT 1 components need 3 hosts), and with Ensure accessibility the objects become non-compliant with the policy.

3. Before proceeding further, ensure that all the VMs can fit on a single host: do the math for CPU, memory, storage, and network.
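The sizing check above can be sketched in Python. This is a minimal sketch under stated assumptions: each VM's raw vSAN footprint is taken as its provisioned disk size times FTT + 1 (mirroring), which is conservative for thin disks and ignores VM home, swap, and other overhead; `max_vcpu_per_core` is a hypothetical consolidation ratio; and the vCPU, memory, and host figures in the example are hypothetical placeholders (only the six 60 GB disks come from the lab). Network is not modelled because it is not a simple sum.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    vcpus: int
    memory_gb: float
    disk_gb: float  # provisioned size; a thin disk usually consumes less


@dataclass
class Host:
    cores: int
    memory_gb: float
    vsan_capacity_gb: float


def raw_vsan_gb(vms: list[VM], ftt: int) -> float:
    """Worst-case raw vSAN capacity with RAID-1 mirroring: FTT + 1 full copies of each disk."""
    return sum(vm.disk_gb for vm in vms) * (ftt + 1)


def fits_on_host(vms: list[VM], host: Host, ftt: int = 0,
                 max_vcpu_per_core: float = 4.0) -> dict[str, bool]:
    """Compare total VM demand with one host; network is not modelled here."""
    return {
        "cpu": sum(vm.vcpus for vm in vms) <= host.cores * max_vcpu_per_core,
        "memory": sum(vm.memory_gb for vm in vms) <= host.memory_gb,
        "storage": raw_vsan_gb(vms, ftt) <= host.vsan_capacity_gb,
    }


# Six 60 GB VMs as in the lab; the vCPU, memory and host figures are hypothetical.
lab_vms = [VM(f"vm{i}", vcpus=2, memory_gb=8, disk_gb=60) for i in range(1, 7)]
host = Host(cores=16, memory_gb=128, vsan_capacity_gb=500)

print(fits_on_host(lab_vms, host, ftt=0))
print(fits_on_host(lab_vms, host, ftt=1))
```

With these placeholder numbers the six VMs need 360 GB of raw capacity at FTT 0 but 720 GB at FTT 1, which shows why the storage math should be done against the policy the VMs will actually run with on the single host.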

Steps to be followed:

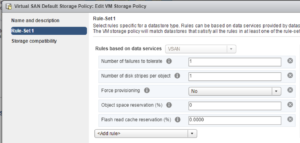

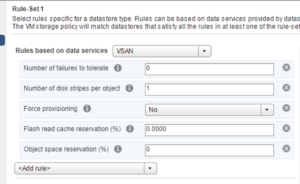

1. Create a new storage policy with Failures to tolerate set to 0, as shown below:

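2. Now that the policy is created, assign it to the existing VMs in the cluster that is planned for conversion to one node. Yes, this can be done from the GUI too, but I prefer single-line PowerCLI commands just to save some time. The first command applies the policy to each VM's home object; the second applies it explicitly to each VM's hard disks so that the virtual disks are moved to the new policy as well:

```powershell
get-cluster lab-cluster-1 | get-vm | get-spbmentityconfiguration | set-spbmentityconfiguration -storagepolicy "one node storage policy"
get-cluster lab-cluster-1 | get-vm | get-harddisk | get-spbmentityconfiguration | set-spbmentityconfiguration -storagepolicy "one node storage policy"
```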

3. Check the storage policy using the same command used before:

```powershell
get-cluster lab-cluster-1 | get-vm | get-spbmentityconfiguration | select-object Entity,Storagepolicy
```

4. The VMs in the cluster that were previously mapped to "Virtual SAN Default Storage Policy" are now mapped to "one node storage policy", with only 1 component for each object.

5. Full data migration is now possible with this policy, so pick the two hosts to remove, for example those with a history of hardware failures or a unique configuration.

6. Put one host into maintenance mode with full data migration; a resync is triggered as its data is rebuilt on the other hosts.

7. After the host enters maintenance mode, SSH to the host and run `esxcli vsan cluster leave`. This command removes the host from the vSAN cluster; then move the host to the datacenter root folder or remove it from the cluster directly.

8. Repeat steps 6 and 7 for one more host. The cluster will now have only one host. SSH to that remaining host and confirm with `esxcli vsan cluster get`; the Sub-Cluster Member Count in the output should now be 1.

9. Check vSAN cluster health, inaccessible objects, and resync status to confirm that no issues exist.
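Steps 5 to 8 work only because each object has a single component once the FTT 0 policy is applied. A minimal Python sketch of why, assuming a simplified RAID-1 model in which an FTT n object needs 2n + 1 components on separate hosts: evacuating a host with full data migration is only possible if the hosts left behind can still hold all of them. `can_full_migrate` and `removal_plan` are hypothetical names, and real vSAN also checks free capacity, fault domains, and other policy rules, so this is an illustration of the host-count constraint only.

```python
def can_full_migrate(hosts_in_cluster: int, ftt: int) -> bool:
    """True if the hosts left after evacuating one can still hold 2*FTT + 1 components."""
    components = 2 * ftt + 1  # n + 1 replicas + n witnesses (simplified model)
    return hosts_in_cluster - 1 >= components


def removal_plan(start_hosts: int, target_hosts: int, ftt: int) -> list[str]:
    """Simulate removing hosts one at a time with full data migration."""
    log = []
    hosts = start_hosts
    while hosts > target_hosts:
        if not can_full_migrate(hosts, ftt):
            log.append(f"{hosts} hosts, FTT {ftt}: full data migration not possible")
            break
        hosts -= 1
        log.append(f"host evacuated and left the vSAN cluster; {hosts} host(s) remain")
    return log


for ftt in (1, 0):
    print(f"FTT {ftt}:", removal_plan(3, 1, ftt))
```

Under FTT 1 the plan stops immediately at 3 hosts, which matches the earlier observation that full data migration is not possible with the default policy; under FTT 0 both hosts can be evacuated one after the other.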

Note: The above procedure might differ if the cluster has more than 3 hosts or is a stretched cluster.

Thanks for reading and let me know if you have any questions by commenting below.